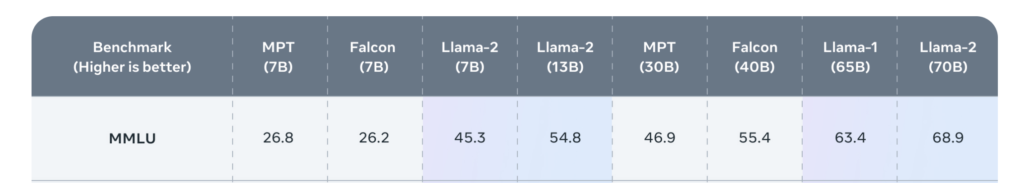

Of the many benchmarks used to compare Llama 2's performance with that of its competitors, MMLU is the first one cited in the paper introducing the model.

Llama 2's score on MMLU

The 70B version of Llama 2 scores 68.9 on this benchmark. That puts it roughly on par with GPT-3.5 but far behind GPT-4 (86.4), which leads all LLMs on this measure.
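To make the comparison concrete, here is a minimal sketch of the arithmetic behind it. The scores are copied from the leaderboard in this article; the `gap` helper and the variable names are illustrative assumptions, not part of any benchmark tooling.

```python
# MMLU average scores (in %) copied from the leaderboard in this article.
scores = {
    "GPT-4": 86.4,
    "GPT-3.5": 70.0,
    "LLaMA 2 70B": 68.9,
    "Random Baseline": 25.0,
}


def gap(model: str, reference: str) -> float:
    """Return how many percentage points `reference` scores above `model`."""
    return round(scores[reference] - scores[model], 1)


# Distance from Llama 2 70B up to the two GPT models.
gap_to_gpt4 = gap("LLaMA 2 70B", "GPT-4")
gap_to_gpt35 = gap("LLaMA 2 70B", "GPT-3.5")

# Margin of Llama 2 70B over the random baseline listed in the leaderboard.
above_chance = gap("Random Baseline", "LLaMA 2 70B")

print(f"Behind GPT-4:   {gap_to_gpt4} points")
print(f"Behind GPT-3.5: {gap_to_gpt35} points")
print(f"Above chance:   {above_chance} points")
```

The 25.0 baseline corresponds to guessing at random among four answer choices, so the gap to GPT-4 is best read against that floor rather than against zero.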

Description of MMLU

MMLU (Massive Multitask Language Understanding) is a new benchmark designed to measure knowledge acquired during pretraining by evaluating models exclusively in zero-shot and few-shot settings. This makes the benchmark more challenging and more similar to how we evaluate humans. The benchmark covers 57 subjects across STEM, the humanities, the social sciences, and more. It ranges in difficulty from an elementary level to an advanced professional level, and it tests both world knowledge and problem solving ability. Subjects range from traditional areas, such as mathematics and history, to more specialized areas like law and ethics. The granularity and breadth of the subjects makes the benchmark ideal for identifying a model’s blind spots.

https://paperswithcode.com/dataset/mmlu

Example of an MMLU question (available on HuggingFace):

question (string)

"Davis decided to kill Adams. He set out for Adams's house. Before he got there he saw Brooks, who resembled Adams. Thinking that Brooks was Adams, Davis shot at Brooks. The shot missed Brooks but wounded Case, who was some distance away. Davis had not seen Case. In a prosecution under a statute that proscribes any attempt to commit murder, the district attorney should indicate that the intended victim(s) was/were"

choices (sequence)

[ "Adams only.", "Brooks only.", "Case only.", "Adams and Brooks" ]

answer (class label)

1 (B)
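The record above can be turned into a multiple-choice prompt and scored as in the sketch below. The field names (`question`, `choices`, `answer`) come from the example. The prompt template (lettered options followed by `Answer:`) and the helpers `format_item`, `build_prompt` and `accuracy` are assumptions made for illustration, not the evaluation code used for any model in this article.

```python
from string import ascii_uppercase

# Record copied from the example above.
record = {
    "question": (
        "Davis decided to kill Adams. He set out for Adams's house. "
        "Before he got there he saw Brooks, who resembled Adams. "
        "Thinking that Brooks was Adams, Davis shot at Brooks. "
        "The shot missed Brooks but wounded Case, who was some distance away. "
        "Davis had not seen Case. In a prosecution under a statute that "
        "proscribes any attempt to commit murder, the district attorney "
        "should indicate that the intended victim(s) was/were"
    ),
    "choices": ["Adams only.", "Brooks only.", "Case only.", "Adams and Brooks"],
    "answer": 1,  # class label: index into `choices`
}


def format_item(item: dict, with_answer: bool = False) -> str:
    """Render one record as lettered options (hypothetical template)."""
    lines = [item["question"]]
    for letter, choice in zip(ascii_uppercase, item["choices"]):
        lines.append(f"{letter}. {choice}")
    answer = f" {ascii_uppercase[item['answer']]}" if with_answer else ""
    lines.append("Answer:" + answer)
    return "\n".join(lines)


def build_prompt(shots: list, target: dict) -> str:
    """Few-shot prompt: solved examples first, then the unanswered target."""
    blocks = [format_item(shot, with_answer=True) for shot in shots]
    blocks.append(format_item(target))
    return "\n\n".join(blocks)


def accuracy(predictions: list, items: list) -> float:
    """Percentage of predicted letters that match the class labels."""
    correct = sum(
        pred == ascii_uppercase[item["answer"]]
        for pred, item in zip(predictions, items)
    )
    return 100.0 * correct / len(items)


print(format_item(record, with_answer=True))
```

With an empty `shots` list, `build_prompt` yields a zero-shot prompt. Passing five solved records would give the "few-shot, k=5" setting that appears in the leaderboard entries.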

Ranking of Llama 2 against its competitors

| Rank | Model | Average (%) | Parameters (Billions) | Tokens (Billions) | Year | Tags |
|---|---|---|---|---|---|---|
| 1 | GPT-4 (few-shot, k=5) | 86.4 | | | 2023 | few-shot |
| 2 | Flan-PaLM 2 (L) | 81.2 | | | 2023 | |
| 3 | PaLM 2 (large) | 78.3 | | | 2023 | |
| 4 | Flan-PaLM (5-shot, finetuned, CoT + SC) | 75.2 | 540 | | 2022 | fine-tuned |
| 5 | Flan-U-PaLM 540B | 74.1 | 540 | | 2022 | fine-tuned |
| 6 | Flan-PaLM (5-shot, finetuned) | 72.2 | 540 | | 2022 | fine-tuned |
| 7 | Codex + REPLUG LSR (few-shot, k=5) | 71.8 | | | 2023 | few-shot |
| 8 | Codex + REPLUG (few-shot, k=5) | 71.4 | | | 2023 | few-shot |
| 9 | Flan-PaLM 540B (CoT) | 70.9 | 540 | | 2022 | fine-tuned |
| 10 | U-PaLM (few-shot, k=5) | 70.7 | 540 | | 2022 | few-shot |
| 11 | Flan-PaLM (5-shot, finetuned, CoT) | 70.2 | 540 | | 2022 | fine-tuned |
| 12 | GPT-3.5 (few-shot, k=5) | 70 | | | 2023 | few-shot |
| 13 | Flan-U-PaLM (CoT) | 69.8 | 540 | | 2022 | fine-tuned |
| 14 | PaLM 540B (few-shot, k=5) | 69.3 | 540 | 780 | 2022 | few-shot |
| 15 | LLaMA 65B (fine-tuned) | 68.9 | 65 | 1400 | 2023 | fine-tuned |
| 16 | LLaMA 2 70B (few-shot, k=5) | 68.9 | 70 | | 2023 | |
| 17 | Codex (few-shot, k=5) | 68.3 | 175 | | 2023 | few-shot |
| 18 | Chinchilla (few-shot, k=5) | 67.5 | 70 | 1400 | 2022 | few-shot |
| 19 | Flan-cont-PaLM | 66.1 | 62 | | 2022 | |
| 20 | LLaMA 65B (few-shot, k=5) | 63.4 | 65 | 1400 | 2023 | few-shot |
| 21 | Flan-cont-PaLM (CoT) | 62 | 540 | | 2022 | |
| 22 | Gopher (few-shot, k=5) | 60.0 | 280 | 300 | 2021 | few-shot |
| 23 | Flan-PaLM 62B | 59.6 | 62 | | 2022 | |
| 24 | LLaMA 33B (few-shot, k=5) | 57.8 | 33 | 1400 | 2023 | |
| 25 | Flan-PaLM 62B (CoT) | 56.9 | | | 2022 | fine-tuned |
| 26 | Flan-T5-XXL | 55.1 | 11 | | 2022 | |
| 27 | GPT-3 (fine-tuned) | 53.9 | 175 | 300 | 2020 | fine-tuned |
| 28 | GAL 120B (zero-shot) | 52.6 | 120 | 450 | 2022 | zero-shot, few-shot |
| 29 | Flan-T5-XL | 52.4 | 3 | | 2022 | |
| 30 | Flan-PaLM 8B | 49.3 | 8 | | 2022 | |
| 31 | UnifiedQA | 48.9 | 11 | | 2020 | fine-tuned |
| 32 | Flan-T5-XXL (CoT) | 48.6 | | | 2022 | |
| 33 | Atlas (few-shot, k=5) | 47.9 | 11 | | 2022 | |
| 34 | LLaMA 13B (few-shot, k=5) | 46.9 | 13 | | 2023 | |
| 35 | Flan-T5-XL (CoT) | 45.5 | | | 2022 | |
| 36 | Flan-T5-Large 780M | 45.1 | | | 2022 | |
| 37 | GLM-130B | 44.8 | | | 2022 | |
| 38 | GPT-3 175B (few-shot, k=5) | 43.9 | | | 2020 | few-shot |
| 39 | GPT-3 6.7B (fine-tuned) | 43.2 | 6.7 | | 2020 | fine-tuned |
| 40 | Flan-PaLM 8B (CoT) | 41.3 | | | 2022 | |
| 41 | Flan-T5-Large (CoT) | 40.5 | | | 2022 | |
| 42 | Bloomberg GPT (few-shot, k=5) | 39.18 | | | 2023 | |
| 43 | BLOOM 176B (few-shot, k=5) | 39.13 | 176 | | 2023 | |
| 44 | OPT 66B (few-shot, k=5) | 35.99 | 66 | | 2023 | |
| 45 | GPT-NeoX (few-shot, k=5) | 35.95 | | | 2023 | |
| 46 | Flan-T5-Base 250M | 35.9 | | | 2022 | |
| 47 | LLaMA 7B (few-shot, k=5) | 35.1 | 7 | | 2023 | |
| 48 | Flan-T5-Base (CoT) | 33.7 | | | 2022 | fine-tuned |
| 49 | GPT-NeoX-20B (few-shot, k=5) | 33.6 | 20 | 300 | 2022 | few-shot |
| 50 | GPT-2 1.5B (fine-tuned) | 32.4 | 1.5 | 300 | 2019 | fine-tuned |
| 51 | Gopher-7.1B (few-shot, k=5) | 29.5 | 7.1 | 300 | 2021 | few-shot |
| 52 | Flan-T5-Small 80M | 28.7 | | | 2022 | |
| 53 | GPT-NeoX-20B (zero-shot) | 28.6 | 20 | 300 | 2022 | zero-shot |
| 54 | RoBERTa (fine-tuned) | 27.9 | 0.354 | | 2019 | fine-tuned |
| 55 | GPT-J-6B (zero-shot) | 27.3 | 6 | 300 | 2021 | zero-shot |
| 56 | Gopher-1.4B (few-shot, k=5) | 27.3 | 1.4 | 300 | 2021 | few-shot |
| 57 | ALBERT (fine-tuned) | 27.1 | 0.031 | | 2019 | fine-tuned |
| 58 | GPT-3 13B (few-shot, k=5) | 26 | 13 | | 2020 | few-shot |
| 59 | GPT-3 2.7B (few-shot, k=5) | 25.9 | 2.7 | | 2020 | few-shot |
| 60 | Gopher-0.4B (few-shot, k=5) | 25.7 | 0.4 | 300 | 2021 | few-shot |
| 61 | Random Baseline | 25.0 | | | 2020 | |
| 62 | GPT-3 6.7B (few-shot, k=5) | 24.9 | 6.7 | | 2020 | few-shot |
| 63 | Flan-T5-Small (CoT) | 12.1 | | | 2022 | |
| 64 | GPT-3 175B (few-shot, k=5) | | 175 | | 2020 | |
| 65 | Minerva 540B-maj1@16 (few-shot, k=5) | | 540 | | 2022 | few-shot |
| 66 | Minerva 540B (few-shot, k=5) | | 540 | | 2022 | few-shot |
| 67 | Minerva 62B-maj1@16 (few-shot, k=5) | | 62 | | 2022 | few-shot |
| 68 | Minerva 62B (few-shot, k=5) | | 62 | | 2022 | few-shot |
| 69 | Minerva 8B-maj1@16 (few-shot, k=5) | | 8 | | 2022 | few-shot |
| 70 | PaLM 62B (few-shot, k=5) | | 62 | | 2022 | few-shot |
| 71 | Minerva 8B (few-shot, k=5) | | 8 | | 2022 | few-shot |
| 72 | PaLM 8B (few-shot, k=5) | | 8 | | 2022 | few-shot |
| 73 | Flan-T5-Small | | 0.08 | | 2022 | |
| 74 | Flan-T5-Base | | 0.25 | | 2022 | |
| 75 | Flan-T5-Large | | 0.78 | | 2022 | |