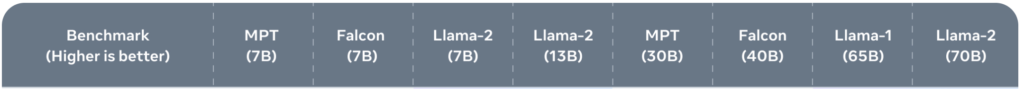

Among the many benchmarks used to compare Llama 2's performance with that of its competitors, Natural Questions is the third one cited in the paper introducing the model.

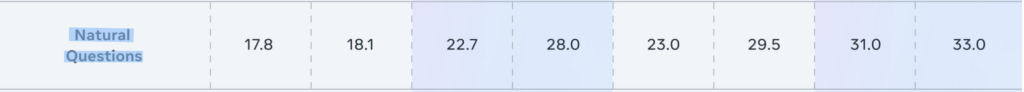

Llama 2 score on Natural Questions

Llama 2 70B scores 33 on this benchmark, which puts it in first place in the list presented in the paper.

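ChatBot was not tested. GPT-3 175B was tested few-shot (k=64) and scores 29.9. Among the one-shot results in the ranking below, Llama 2 70B (33) comes second, behind PaLM 2-L (37.5) but ahead of PaLM 2-M (32), LLaMA 65B (31) and PaLM-540B (29.3). Several models evaluated few-shot score higher, for example LLaMA 65B with k=64 (39.9) or PaLM-540B with k=64 (39.6).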
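The comparison above can be checked against the ranking table with a small Python sketch. The EM scores are copied from the table and split by evaluation setting (one-shot vs. few-shot); the helper names `rank_of` and `better_than` are hypothetical and only serve this illustration.

```python
# EM scores copied from the ranking table, split by evaluation setting.
ONE_SHOT = {
    "PaLM 2-L": 37.5,
    "LLaMA 2 70B": 33.0,
    "PaLM 2-M": 32.0,
    "LLaMA 65B": 31.0,
    "PaLM-540B": 29.3,
    "GLaM 62B/64E": 26.3,
    "PaLM 2-S": 25.3,
}

FEW_SHOT = {
    "Codex + REPLUG LSR": 45.5,
    "Atlas (k=64, Wiki-dec-2018 index)": 45.1,
    "Codex + REPLUG": 44.7,
    "Atlas (k=64, Wiki-dec-2021+CC index)": 42.4,
    "LLaMA 65B (k=64)": 39.9,
    "PaLM-540B (k=64)": 39.6,
    "Chinchilla (k=64)": 35.5,
    "LLaMA 65B (k=5)": 35.0,
    "GLaM 62B/64E": 32.5,
    "GPT-3 175B (k=64)": 29.9,
    "Gopher (k=64)": 28.2,
    "Neo-6B": 13.7,
}


def rank_of(model: str, scores: dict[str, float]) -> int:
    """1-based position of `model` once entries are sorted by descending EM."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return ordered.index(model) + 1


def better_than(threshold: float, scores: dict[str, float]) -> list[str]:
    """Entries whose EM score is strictly above `threshold`."""
    return [name for name, em in scores.items() if em > threshold]


llama2 = ONE_SHOT["LLaMA 2 70B"]
print(rank_of("LLaMA 2 70B", ONE_SHOT))    # 2
print(len(better_than(llama2, FEW_SHOT)))  # 8
```

Eight of the twelve few-shot rows beat Llama 2's one-shot score, but not all of them: GPT-3 175B (29.9) and Gopher (28.2) stay below it.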

Description of Natural Questions

We present the Natural Questions corpus, a question answering data set. Questions consist of real anonymized, aggregated queries issued to the Google search engine. An annotator is presented with a question along with a Wikipedia page from the top 5 search results, and annotates a long answer (typically a paragraph) and a short answer (one or more entities) if present on the page, or marks null if no long/short answer is present. The public release consists of 307,373 training examples with single annotations; 7,830 examples with 5-way annotations for development data; and a further 7,842 examples 5-way annotated sequestered as test data.
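The annotation scheme described above can be pictured with a minimal Python sketch. The schema is a hypothetical simplification (answers kept as plain strings, class and field names invented for illustration); the official release stores answers differently, as spans of the Wikipedia page. The split sizes and the number of annotations per example are the ones given in the description.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Annotation:
    """One annotator's judgement on a (question, Wikipedia page) pair.

    Hypothetical simplified schema: answers are kept as plain strings.
    """
    long_answer: Optional[str] = None  # typically a paragraph; None means "null"
    short_answers: List[str] = field(default_factory=list)  # one or more entities

    def is_null(self) -> bool:
        """True when the annotator found neither a long nor a short answer."""
        return self.long_answer is None and not self.short_answers


@dataclass
class NQExample:
    question: str    # real, anonymized Google search query
    page_title: str  # Wikipedia page taken from the top 5 search results
    annotations: List[Annotation]  # 1 for training, 5 for dev and test


# Split sizes and annotations per example, as stated in the description.
SPLITS = {"train": 307_373, "dev": 7_830, "test": 7_842}
ANNOTATIONS_PER_EXAMPLE = {"train": 1, "dev": 5, "test": 5}

total_examples = sum(SPLITS.values())
total_annotations = sum(SPLITS[s] * ANNOTATIONS_PER_EXAMPLE[s] for s in SPLITS)
print(total_examples)     # 323045
print(total_annotations)  # 385733
```

Keeping the five annotations of dev and test examples as a list, rather than merging them, reflects why those splits exist: answers can be compared against several independent judgements.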

https://aclanthology.org/Q19-1026/

Ranking of Llama 2 and its competitors

| Rank | Model (setting) | EM (exact match) |
|---|---|---|
| 1 | Atlas (full, Wiki-dec-2018 index) | 64 |
| 2 | Atlas (full, Wiki-dec-2021+CC index) | 60.4 |
| 3 | FiE | 58.4 |
| 4 | R2-D2 (full) | 55.9 |
| 5 | ReAtt | 54.7 |
| 6 | FiD-KD (full) | 54.7 |
| 7 | EMDR² | 52.5 |
| 8 | FiD (full) | 51.4 |
| 9 | RETRO + DPR (full) | 45.5 |
| 10 | Codex + REPLUG LSR (few-shot) | 45.5 |
| 11 | Atlas (few-shot, k=64, Wiki-dec-2018 index) | 45.1 |
| 12 | Codex + REPLUG (few-shot) | 44.7 |
| 13 | RAG | 44.5 |
| 14 | Atlas (few-shot, k=64, Wiki-dec-2021+CC index) | 42.4 |
| 15 | DPR | 41.5 |
| 16 | REALM | 40.4 |
| 17 | LLaMA 65B (few-shot, k=64) | 39.9 |
| 18 | PaLM-540B (few-shot, k=64) | 39.6 |
| 19 | PaLM 2-L (one-shot) | 37.5 |
| 20 | Chinchilla (few-shot, k=64) | 35.5 |
| 21 | LLaMA 65B (few-shot, k=5) | 35 |
| 22 | LLaMA 2 70B (one-shot) | 33 |
| 23 | GLaM 62B/64E (few-shot) | 32.5 |
| 24 | PaLM 2-M (one-shot) | 32 |
| 25 | LLaMA 65B (one-shot) | 31 |
| 26 | GPT-3 175B (few-shot, k=64) | 29.9 |
| 27 | PaLM-540B (one-shot) | 29.3 |
| 28 | Gopher (few-shot, k=64) | 28.2 |
| 29 | GLaM 62B/64E (one-shot) | 26.3 |
| 30 | PaLM 2-S (one-shot) | 25.3 |
| 31 | LLaMA 33B (zero-shot) | 24.9 |
| 32 | GLaM 62B/64E (zero-shot) | 24.7 |
| 33 | PaLM-540B (zero-shot) | 21.2 |
| 34 | Neo-6B (QA) | 19.7 |
| 35 | Neo-6B (QA + WS) | 19.6 |
| 36 | Neo-6B (few-shot) | 13.7 |
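The EM column stands for exact match: a prediction counts as correct only if it matches one of the reference short answers exactly, commonly after a light normalization step. The sketch below assumes the SQuAD-style normalization (lowercasing, removing punctuation and English articles, collapsing whitespace) that many open-domain QA papers reuse; the scripts actually behind each score in the table may normalize differently, and `normalize_answer`, `exact_match` and `em_score` are illustrative names.

```python
import re
import string
from typing import Sequence

_PUNCTUATION = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: Sequence[str]) -> bool:
    """True if the normalized prediction equals any normalized reference answer."""
    pred = normalize_answer(prediction)
    return any(pred == normalize_answer(g) for g in gold_answers)


def em_score(predictions: Sequence[str], references: Sequence[Sequence[str]]) -> float:
    """Percentage of questions answered exactly, as in the EM column."""
    if not predictions:
        return 0.0
    hits = sum(exact_match(p, refs) for p, refs in zip(predictions, references))
    return 100.0 * hits / len(predictions)


# Toy predictions and references, invented for illustration only.
preds = ["The Eiffel Tower", "1889", "Paris, France"]
refs = [["Eiffel Tower"], ["1889"], ["Paris"]]
print(round(em_score(preds, refs), 1))  # 66.7
```

The third toy prediction fails even though it contains the right city: EM gives no partial credit, which is one reason scores on this benchmark stay well below 100.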